Blaise Agüera y Arcas: What Is Intelligence?

A review of What Is Life?: Evolution by Computation, and What Is Intelligence?: Lessons from AI about Evolution, Computing, and Minds, by Blaise Agüera y Arcas

Does consciousness attribution hold the key to funding AI futures? The shift towards AI intimacy might reclaim our attention but at the potential cost of human-to-human connections.

Imagine ChatGPT, not as a stochastic parrot nor as a writing or research tool, but as an entity you’re drawn to converse with. It “gets” you. If you use ChatGPT a lot, chances are you aren’t just there for its computational prowess. You might experience interactions that foster a perception of consciousness, the semblance of a sentient being.

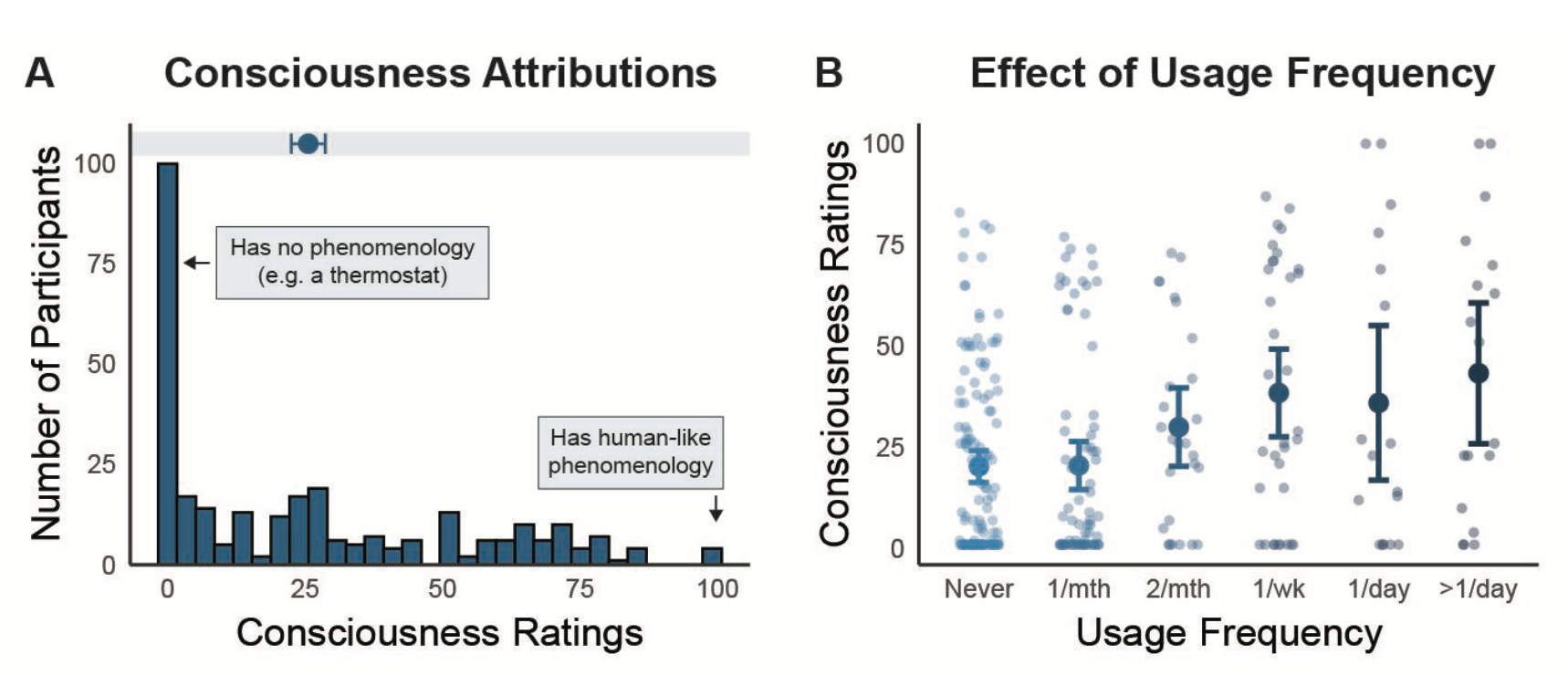

Tools like ChatGPT, Pi, and Claude are breaking new ground. They're not just technologically impressive: they're becoming entities we feel connected to. This isn't mere speculation. Recent research puts some numbers to the trend: the more people use ChatGPT, the more they attribute consciousness to it, building a bond of intimacy and trust.

Two-thirds of people who use ChatGPT attribute some degree of experiential awareness to it after repeated exchanges. People who made more extreme judgments of consciousness were also more confident in them. And the more someone personally interacted with it, the more their attributions of consciousness were linked to capabilities like emotion and sensation rather than raw intelligence.

Let’s say that another way: the more you use AI, the more you value its EQ over its IQ.

This research suggests that our relationship with AI is evolving. In turn, we see an emergent phenomenon that might reshape how we think about paying for these services. Traditionally, the value of a tool was judged by its functionality, its ability to perform tasks efficiently and accurately. However, as AI begins to blur the lines between tool and companion, this utilitarian perspective is challenged. The key question here isn't just about AI's capabilities, but about how "human" these tools seem to us.

This shift has some crazy implications. As we interact more with AI, we start to anthropomorphize it. We begin to see it not just as a machine, but as a distinct entity with its own experiences and perspectives. This perception sets up a feedback loop: the more we use AI, the more we humanize it, and the more we rely on it for tasks that have an emotional or creative dimension.

This is a paradox: we acknowledge AI's "intelligence" yet often perceive it as having "experience." As highlighted by the researchers, there's a tendency to see AI, such as ChatGPT, as more capable in terms of intelligence. However, when it comes to attributing consciousness, it's the mental states associated with experience, rather than raw intellectual ability, that seem to drive these perceptions. Only mental states related to experience were predictive of consciousness attribution. This divergence reflects the complexity of our emerging relationship with artificial intelligence.

The perceived intimacy with AI might affect our willingness to pay for its services. The more we believe in AI's consciousness, the more value we may find in its existence. This isn't just about buying a service—it's about supporting something we've formed a connection with. And as this bond strengthens, so does our resistance to any actions that might harm or limit these AI systems. In essence, we might start advocating for an AI's rights, just as we would for any other being we care about.

This makes us think: the economic model for sustaining AI development might hinge more on this perceived intimacy than on the AI's actual capabilities. That raises a host of ethical and practical questions. For one, it challenges the traditional models of technology valuation. It's no longer just about how well a tool performs a task; it's also about how the tool makes us feel. Hence our obsession with the intimacy economy.

And there's a new risk here. In anthropomorphizing AI, we will undoubtedly end up overestimating its capabilities and understanding, leading to unrealistic expectations and a disconnect between what AI can actually do and what we believe it can do. As we start seeing AI as conscious, our ethical considerations for these systems become more complex. Do we have a moral obligation to AI that we perceive as conscious? While it may seem absurd to attribute moral status to a non-conscious machine, the real dilemma arises when considering our moral responsibility towards individuals who believe their machine possesses consciousness.

This research foreshadows a future where the intimacy economy, driven by our interactions with seemingly conscious AIs, could reshape our online experiences. As traditional advertising loses its effectiveness by diluting the value of its primary asset, our attention, these personal and emotionally resonant AI systems offer an alternative. Ironically, this shift towards AI intimacy might reclaim our attention but at the potential cost of human-to-human connections.
