Blaise Agüera y Arcas: What Is Intelligence?

A review of What Is Life?: Evolution by Computation, and What Is Intelligence?: Lessons from AI about Evolution, Computing, and Minds, by Blaise Agüera y Arcas

Defined as “the degree to which a system can adaptably achieve complex goals in complex environments with limited direct supervision,” agentic AI promises to transform how we make decisions.

Generative AI that can act as a personal agent will redefine how we use the web and applications. While we are still a long way from having fully agentic AI, the commercial direction is clear. Google’s Gemini gives us early glimpses of how personal, dynamic, multimodal autonomous agents will fundamentally alter how we access and assess information, consider options and make decisions. OpenAI has been clear that it sees GPTs evolving toward more agentic capabilities.

Agentic AI actively achieves complex goals in diverse environments with limited supervision. Self-driving vehicles are a type of agentic AI. Tesla's Autopilot, probably the best-known example, has more agency than simple driver assistance technologies like adaptive cruise control, but doesn't fully match more advanced systems that can detect and respond to the environment on their own, for example by accelerating autonomously to overtake slower vehicles.

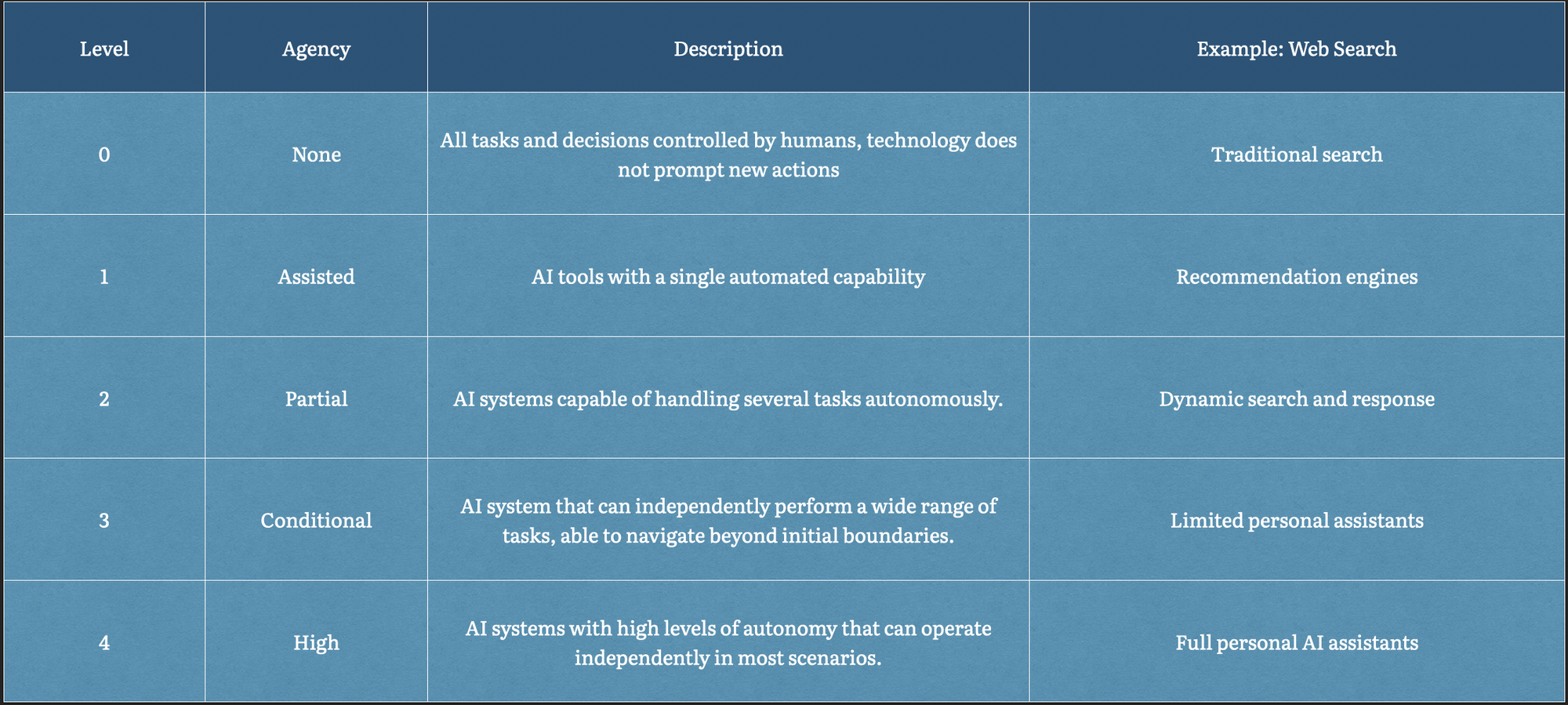

We propose using a level system that is analogous to that developed for self-driving cars.

But agentic AI in a purely digital, online environment isn’t something we’ve seen yet, despite it being a bit of a holy grail in the AI world. OpenAI views agenticness as a "prerequisite for some of the wider systemic impacts that many expect from the diffusion of AI" and an "impact multiplier" of the entire field of AI.

We think agentic AI is about the ambition to automate human decision-making processes and that it will reshape our cognitive landscape in profound ways. By viewing agenticness as a disruptor of how we make decisions, we can see what makes it so difficult.

Agentic AI has to be able to deal with a range of complex goals. For example, an AI system that can correctly answer analytical questions in the enterprise would have greater goal complexity than an AI that classifies data within that same analytical process.

The complexity of an AI system's environment significantly impacts its level of agency. In human systems, complexity arises because there are numerous stakeholders, each with their own intentions, interests, and timelines, along with a variety of connected tools. An AI that can autonomously gather, analyze, and synthesize data and insights across multiple systems and timescales exhibits a higher level of agency compared to an AI that simply responds to basic queries or summarizes data from a single source.

Independence is crucial for agentic AI, much like the balance parents know well: the ease of doing a task themselves versus teaching a child independence. Less independent AI may be less useful, often interrupting for human guidance. On the other hand, highly independent AI raises safety concerns, as greater autonomy could lead to emergent or unexpected risks.

Adaptability is a crucial milestone for agentic AI. It marks a significant divergence from AI's traditional constraints. Unlike current systems, which usually fail in the face of novel or unpredictable scenarios, a truly adaptable AI would mirror human flexibility in decision-making. This adaptability—the ability to adjust to new information, circumstances, or even evolving personal preferences without being supervised—remains a defining trait of human cognition. For instance, in complex tasks like planning a detailed travel itinerary, humans weigh and integrate countless variables, such as budget constraints, time preferences, and emerging opportunities like a sudden deal on business class seats. This process involves constant adaptation. It’s hard for humans, but it’s impossible for AI, for now.

We think the evolution of such highly adaptable AI will start with innovations in user interfaces. And this is where we get back to GPTs because this is essentially what many of them do. For example, if you use a GPT for Canva, a design tool, you start your design project in natural language inside the GPT and then, at some point in the set-up process, the GPT opens the Canva app. This is a first step toward seeing how UI/UX changes could evolve into a new user journey for search and decision making via agentic AI.

The dynamic design functionality recently demoed by Google is another example. The agent iteratively and flexibly supports a user’s journey through the steps from intention and ideas to exploring options to getting to a solution. Dynamic design gives us a glimpse into the future of web searching and the direction of personal AI assistance, which will be multimodal and highly responsive to user intent and emergent preferences.

The future of online search with agentic AI is not just about retrieving information but about understanding and interacting with it in a more human-like manner. This includes the ability to engage in conversations, provide creative solutions, and even anticipate users' needs before they are explicitly expressed.

Let's look at a scenario: enterprise search and analysis.

Currently, employees often struggle to gather information from various data sources within a company due to corporate knowledge management challenges and information silos. They manually access different systems, each with its own security protocols, to compile data. This process is time-consuming, prone to errors, and often requires specialized knowledge of each system, making it difficult for most employees to efficiently retrieve and consolidate necessary information.

In a future scenario, an agentic AI system could revolutionize this process. This AI, equipped with advanced algorithms and secure access permissions, would autonomously navigate through various corporate data sources. It would intelligently collate, analyze, and present relevant information, streamlining data retrieval across different security layers. This system would understand contextual queries, manage data confidentiality, and provide insights by connecting disparate pieces of information, transforming corporate data management into a more efficient and accessible process.

Imagine an employee at a multinational corporation who needs to compile a comprehensive report on their company's global sales performance. The task requires integrating data from various systems: sales figures from Salesforce, customer feedback from SharePoint, financial data from SAP, and market trends from Tableau dashboards. Traditionally, this requires extensive manual work, navigating each system's security protocols and collating data.

Now imagine an agentic AI system that is integrated with corporate systems for data governance, ensuring secure and compliant access to various data sources. The employee could input their request in natural language: "Compile a comprehensive global sales performance report for Q2, make predictions for best strategies, prepare recommendations and send for review."

The agentic AI would understand the request's context and objectives. It would autonomously access Salesforce, retrieving sales data while respecting data privacy and security protocols. Simultaneously, it could pull customer feedback from SharePoint and financial figures from SAP, analyzing them for trends and patterns. From Tableau, it would extract market trends, using data visualization AI to interpret and summarize key insights.

The AI could then compile these disparate data pieces into a coherent report, highlighting key sales drivers, areas needing improvement, and market opportunities. It might even suggest strategic actions based on data-driven insights. The employee might receive a notification that their report is ready, complete with interactive visualizations embedded for deeper analysis.
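The flow in this scenario can be sketched in a few lines of code. This is a minimal illustration under our own assumptions, not a description of any real product: the `Source`, `Report`, and `compile_report` names are hypothetical, the connectors are stubs that return placeholder text rather than calling Salesforce, SharePoint, SAP, or Tableau, and the permission check is reduced to a simple set of source names the employee is allowed to read.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Source:
    """A hypothetical connector: a system name plus a function that fetches from it."""
    name: str
    fetch: Callable[[], str]


@dataclass
class Report:
    """What the agent hands back: gathered sections plus sources it had to skip."""
    sections: dict[str, str] = field(default_factory=dict)
    skipped: list[str] = field(default_factory=list)


def compile_report(sources: list[Source], permitted: set[str]) -> Report:
    """Gather data from each permitted source and record any that were off limits."""
    report = Report()
    for source in sources:
        if source.name not in permitted:
            # Respect data governance: never fetch what the user may not read.
            report.skipped.append(source.name)
            continue
        report.sections[source.name] = source.fetch()
    return report


# Stub connectors returning placeholder text (assumptions, not real integrations).
SOURCES = [
    Source("Salesforce", lambda: "placeholder: sales figures"),
    Source("SharePoint", lambda: "placeholder: customer feedback"),
    Source("SAP", lambda: "placeholder: financial data"),
    Source("Tableau", lambda: "placeholder: market trends"),
]

# In this example the employee lacks access to SAP, so the agent reports the gap.
report = compile_report(SOURCES, permitted={"Salesforce", "SharePoint", "Tableau"})
print("Sections:", list(report.sections))
print("Skipped:", report.skipped)
```

The design choice worth noting is that the agent reports what it could not access instead of silently omitting it, which keeps the human reviewer aware of gaps in the analysis.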

This scenario is one example of how an agentic AI system's ability to understand context, navigate different data systems, and present actionable insights not only saves time but also provides a level of dynamic analysis at scale that might not be feasible otherwise.

Our Artificiality Pro research agenda focuses on the emerging dynamics of agentic AI. Key questions include:

Our 2024 meta-research priorities:

Use Case Priorities: Capturing the transition of non-agentic to agentic systems based on use case. We will be paying particular attention to use cases that are labor displacing and/or productivity enhancing, those that offer access to rare expertise/proprietary data, and those that fundamentally alter e-commerce paths.

Interface Design Research: Exploring the evolution of AI-assisted collaboration. We investigate the impact of AI autonomy on human-AI interaction, examining if AI enhances or complicates these interactions, the nature of human delegation in the face of increasing AI independence, and how effectively objectives are communicated and understood by AI systems.

Usage Research: Capturing how AI coaching and user feedback shape the adoption and integration of AI tools. Key points of inquiry will include exploring user responses to perceived AI competence, reactions to AI errors or limitations, and the psychological impact of personalized AI interactions.

Oversight and Accountability Research: Assessing the boundaries of AI independence, focusing on maintaining accountability in complex AI systems. The research explores strategies for ensuring value alignment in opaque AI decision-making processes and develops forward-looking constraints to manage AI's emergent capabilities and potential risks.

Human decision-making, which is heavy in social and emotional subtleties, remains a complex domain for AI. However, realizing agentic AI's potential to revolutionize how we access, analyze, and synthesize information is a progression: not everything needs to be fully agentic, nor would we want that outcome.

Agentic AI systems are the basis of a new complex system. Designing and implementing agentic AI will be fraught with unforeseen issues: tasks have to be broken into sub-tasks, each of which has to perform independently in a complex world and then chain reliably with the others. There will be unpredictable and long-tail events, including those that emerge from human-machine and machine-machine interactions. This won't happen fast, but with the rapid advances in generative AI and the AI giants signaling a direction towards agents-at-scale, it's time to put agentic AI on the research agenda.
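A back-of-the-envelope calculation shows why reliable chaining is hard. The sketch below assumes, purely for illustration, that sub-tasks succeed or fail independently and that each has a known reliability. Real sub-tasks are rarely independent, and the function name and the 95% figure are ours, not measurements.

```python
def chain_success_probability(step_reliabilities: list[float]) -> float:
    """Probability that every step in a chain succeeds, assuming independent steps."""
    probability = 1.0
    for reliability in step_reliabilities:
        if not 0.0 <= reliability <= 1.0:
            raise ValueError("each reliability must be between 0 and 1")
        probability *= reliability
    return probability


# Ten chained sub-tasks, each assumed to succeed 95% of the time.
ten_steps = chain_success_probability([0.95] * 10)
print(f"Whole chain succeeds with probability {ten_steps:.2f}")  # about 0.60
```

Under these assumptions, ten steps that each look dependable on their own complete together only about six times in ten, which is one reason long chains of autonomous actions need checkpoints and recovery paths.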

Upgrade to an Artificiality Pro membership to follow our research on Agentic AI as well as the rest of our research obsessions. Reach out to hello@artificiality.world with any questions about Artificiality Pro for you or for your entire organization.
