Blaise Agüera y Arcas: What Is Intelligence?

A review of What Is Life?: Evolution by Computation, and What Is Intelligence?: Lessons from AI about Evolution, Computing, and Minds, by Blaise Agüera y Arcas

Our obsession with complexity as a route to making sense of AI.

Key Points:

If the world seems like it’s getting more complex, you’re not imagining it. Humans make the world complex. The internet has been the primary driver of complexity in recent years. As we networked ourselves, we increased the complexity of human interaction. Digital networks connect humanity via our data and interactions in ways that defy our evolved intuitions: unfathomable and inscrutable in scale, speed, and scope.

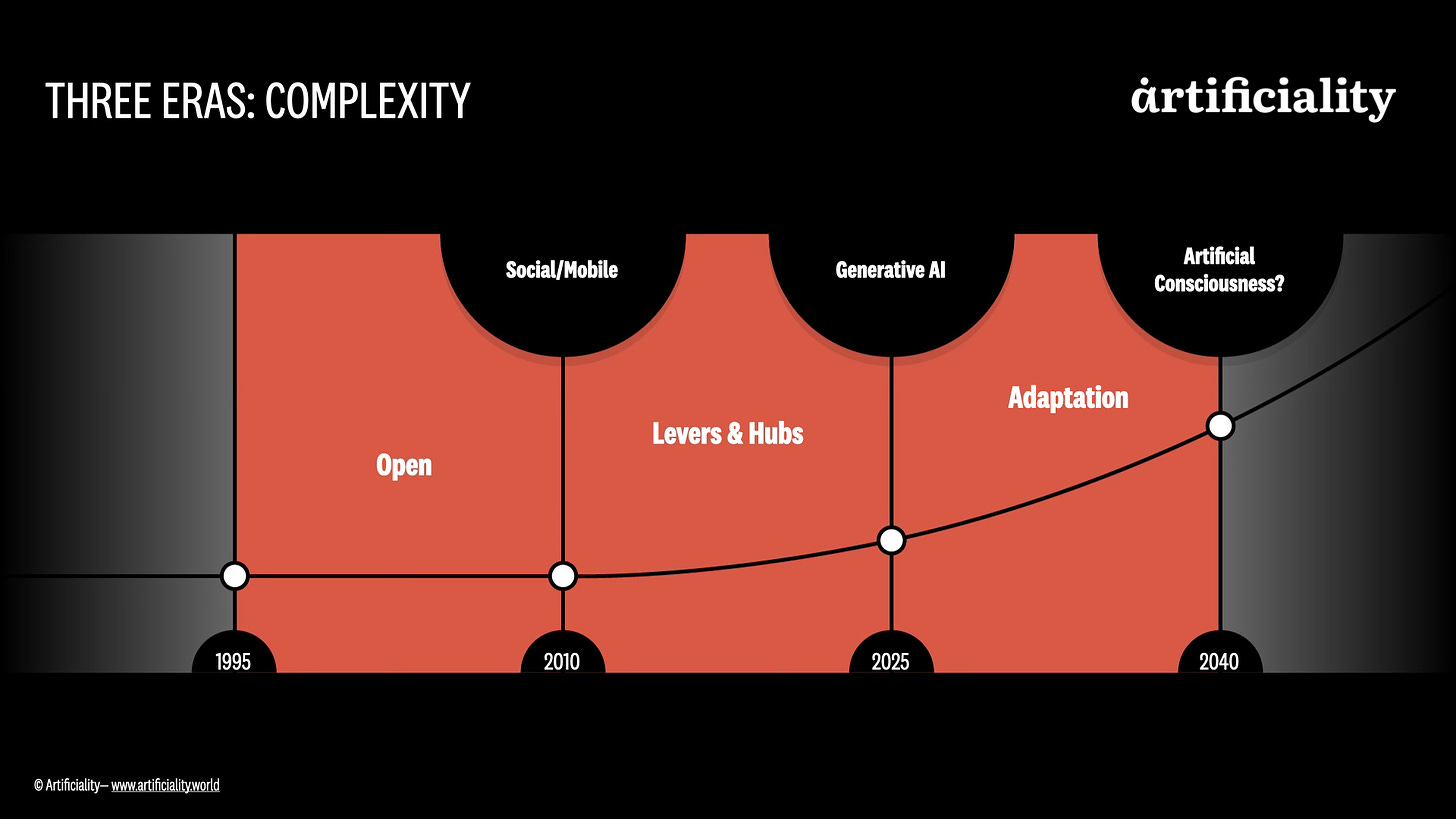

Understanding digital complexity requires historical perspective. Initially, the internet's openness revolutionized information access, promising liberation from traditional knowledge gatekeepers. However, this freedom also led to information overload. A complexity mindset might have predicted the broader implications of this openness, especially as we moved to the internet's next phase: networks. Here, the focus shifted to connections, hubs, and the influence they wield. At first, we didn't foresee how network dynamics would empower influencers and how algorithms would amplify the biggest, most outrageous, most attention-seeking nodes. Now, as generative AI emerges, a complexity mindset reveals its potential to enhance systemic agency. Just as openness defined the internet's early days and networks characterized the social era, adaptation will likely be pivotal in the generative era.

Complexity is both weird and foreign, familiar and normal. One thing it is not is a bigger version of complicated. Complexity is interconnected, social, non-linear. Inventing an electric car was complicated—new technologies and designs. Implementing a system of self-driving cars? Now that’s complex. People behave in unexpected ways and their response to error is fundamentally social. How about building a social network? Complicated—a lot of difficult engineering. But running a healthy social network is one of the more complex tasks that humans undertake today.

Let’s go deeper. Building an electric vehicle involves intricate engineering and coordination of various components—batteries, electric motors, and power electronics—each with its own set of challenges. Battery technology requires balancing energy density with safety and longevity—a puzzle where each piece must fit perfectly. The motor design must be optimized for efficiency and performance, and power electronics need to be robust enough to handle the demands of an EV. These are complicated tasks due to their technical difficulty and the requirement for precision, but they are fundamentally predictable and can be systematically addressed.

On the other hand, deploying a self-driving car system is complex. It involves navigating an unpredictable and constantly changing environment. The system must process vast amounts of data from sensors and cameras to make real-time decisions, considering variables like pedestrian behavior, other vehicles' actions, weather conditions, and road work. Each of these elements is uncertain and dynamic, creating a network of interdependencies that can change in a split second. This complexity means that outcomes are not easily predictable, and the system must continuously learn and adapt. The complexity is further compounded by the regulatory environment, ethical implications, and societal acceptance, making the deployment of self-driving systems a paradigmatic example of complexity in action. New behaviors emerge: cyclists, for example, “game” the hesitancy of a self-driving vehicle. New accident modalities emerge too, such as higher rates of crashes with emergency vehicles, whose drivers expect the avoidance behaviors of human drivers, which differ from those of self-driving cars.

Complexity has unique features that science is beginning to get a handle on. Once you see the world through complexity concepts, you can’t go back. You can share insights across different modes because complex systems exhibit common features. You become sensitized to how flawed committed reductionism is, and to how unfruitful it is to look only at specific elements in a complex system, because what matters is how the system behaves as a whole.

This is the core insight that underpins our obsession with complexity: we will never understand generative AI if we only look at the component parts—a user, a model, a use case, a process—we have to look at the behavior of the system as a whole. And we have to appreciate that it is the interactions between these components that matter as much as, if not more than, the individual things themselves. Sometimes this is obvious: the culture of a company isn’t the sum of the personalities of all the individuals, it is a result of how the individuals respond to each other, to their customers, and to their stakeholders.

Fortunately, complexity science provides us with insights for navigating change. It describes the dynamic interconnectedness of systems and how actions intertwine in counterintuitive ways. The principles of complexity offer a multifaceted lens for understanding phenomena in artificial intelligence, one that acknowledges both the emergent patterns of the whole and the reductionist view of the parts. Complexity frameworks help us to be more agile sensemakers.

Our obsession with complexity was born out of a deep curiosity about the workings of the natural world. We are fascinated by what it means to be a living being that has a purpose: to stay alive, thrive, decide. Ecosystems are complex systems: they are diverse, adaptive, and interconnected.

The most important feature of complex systems is the phenomenon of emergence: the whole is greater than the sum of its parts. Emergent properties arise at the whole ecosystem level, which cannot be predicted by studying individual components in isolation. Ecosystems have tipping points, unpredictable rapid transitions to new states, a feature that increasingly matters to our very survival. Responses in ecosystems are often not proportional to changes in inputs. Feedback loops can stabilize or amplify changes in an ecosystem.
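The two ideas above, feedback loops that stabilize or amplify and responses that are not proportional to inputs, can be made concrete with a toy calculation. What follows is only a minimal sketch, not a model of any real ecosystem: the function names, the gain values, and the logistic response with its `tipping_point` and `steepness` parameters are all assumptions chosen for illustration.

```python
import math


def simulate_feedback(deviation, gain, steps):
    """Track a deviation from equilibrium under a constant feedback gain.

    Each step multiplies the deviation by (1 + gain). A gain between
    -1 and 0 damps the disturbance; a positive gain amplifies it.
    (Hypothetical toy rule, chosen only for illustration.)
    """
    history = [deviation]
    for _ in range(steps):
        deviation = deviation * (1 + gain)
        history.append(deviation)
    return history


def threshold_response(pressure, tipping_point=0.5, steepness=20.0):
    """Logistic response: nearly flat far from the tipping point, steep near it."""
    return 1.0 / (1.0 + math.exp(-steepness * (pressure - tipping_point)))


# The same initial disturbance under stabilizing and amplifying feedback.
damped = simulate_feedback(1.0, gain=-0.5, steps=10)
amplified = simulate_feedback(1.0, gain=0.5, steps=10)

# The same 0.1 change in input, far from and near the assumed tipping point.
far_from_tip = threshold_response(0.1) - threshold_response(0.0)
near_tip = threshold_response(0.55) - threshold_response(0.45)

print(f"stabilizing loop after 10 steps: {damped[-1]:.4f}")
print(f"amplifying loop after 10 steps:  {amplified[-1]:.1f}")
print(f"response to +0.1 far from the tipping point: {far_from_tip:.5f}")
print(f"response to +0.1 near the tipping point:     {near_tip:.3f}")
```

In this sketch the stabilizing loop shrinks the disturbance toward zero while the amplifying loop grows it many times over, and an identical nudge to the input produces a far larger response near the tipping point than far from it.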

But we are also fascinated by the non-living artificial intelligences around us and how they have already altered our lives, most recently via social media. Now generative AI adds another dimension to the complexity of the digital-physical world. It represents what physicists call a phase change. Just as ice changes to water as it warms, generative AI is a sudden and significant shift in AI’s capabilities and behavior. With each model step (say, from GPT-3 to GPT-4) we see large and unpredictable increases in capability. And we have to adapt.

Generative AI increases the agency in the system. Models will gain autonomy, have their own ideas, make their own decisions, and have a mind of their own. They will insinuate themselves into workflows, decisions, and our intimate, private ways of thinking. We will respond to them as partners, even perhaps as if they are conscious themselves. We won’t always know of what they are capable. Perhaps they have intentions of their own. Or perhaps their design doesn’t afford us accurate intuitions. Either way, counterintuitive interactions will create the ultimate digital-physical emergent system.

For instance, as personalized AI tutoring systems become widespread in education, they may yield the unintuitive effect of enhancing human teachers' impact. While seemingly contradictory, this emergence results from AI exponentially expanding transferable context-fungible content and iterative instruction. Teachers gain capacity to focus on things that cement learning and would otherwise be overlooked, such as working on a student’s confidence. That student’s engagement and success increase, so they feel more confident using the AI and also more confident asking more of the teacher. The positive feedback cycle between human educators, AI tutors, and learners creates an emergent system which is greater than the sum of its parts.

Emergence introduces a profound and radical new form of uncertainty. The system is fundamentally dynamic and unpredictable. Humans struggle with uncertainty. The uncertainty that comes from complexity is particularly hard for us to deal with. Radical uncertainty undoes our mental models, forcing us to admit we have limited insight or control over the unfolding future. This is anxiety-provoking but is also a space for learning, growth, and opportunity.

Ignoring complexity is no longer an option. Forecasts of the future based on the past—always flawed yet often useful—will no longer be a good enough approximation because complex behaviors are not predictable. Past data is the food that AI feeds on and some analysts suggest the easy food of the internet has been consumed. AI spews the masticated guts of our already-lived digital selves bite by bite. It will soon eat its own sweets as well as its shit. We will need humans to inject freshness and transformative novelty.

How this unfolds is anyone’s guess, but we propose that it will be a complex collaboration between humans and machines. There are multiple roles for humans in a complex generative AI system. The role of the entrepreneur is to be an actor in the system so their individual vision can emerge. They have an insight into how to intervene, a feeling for how to nudge the system to where they want it to go. Just as life’s increasing complexity begets more complexity, artificial intelligence makes our digital world more complex.

Consider how recommender systems like those on Netflix or Spotify fueled the rise of niche content creators on online platforms. As AI personalization better matched people with highly tailored content, it enabled individuals and small teams creating specialty products to find audiences at scale. This proliferation of tailored offerings then provided richer training data, empowering the AI to get even better at surfacing niche appeals. More creators emerged in an ongoing feedback loop, as the platform's capabilities expanded through the data generated by its rising complexity. Initial AI drove specialization, which begot complexity that enabled even greater personalization, illustrating how each AI advance unlocked new, profitable possibilities.

Generative AI is already a partner in invention, innovation, and learning. But this is only the very beginning. Our obsession with complexity is founded on a desire to think differently about the vast high-dimensional information spaces that AI can help us access. Picture an ecosystem of enterprise AI solutions that adapt models to clients' unique needs by learning from the proprietary data of the firm and from every good (or bad) decision made by managers in the past. As more precise models emerge, digitizing specialized expertise, tacit knowledge, and cultural norms, firms gain confidence to make better decisions. This cycle then improves the core generative models and each iteration catalyzes the next, enabling fresh insights into what makes a good decision.

Applying a complexity lens can help us clarify pivotal unknowns as generative AI interacts with society. What self-reinforcing loops might accelerate discovery? Will decentralized coordination emerge beyond institutional control? How might entrenched systems adapt to rapid advancements? Where exactly are tipping points past which the trajectory of our societies is irreversibly altered?

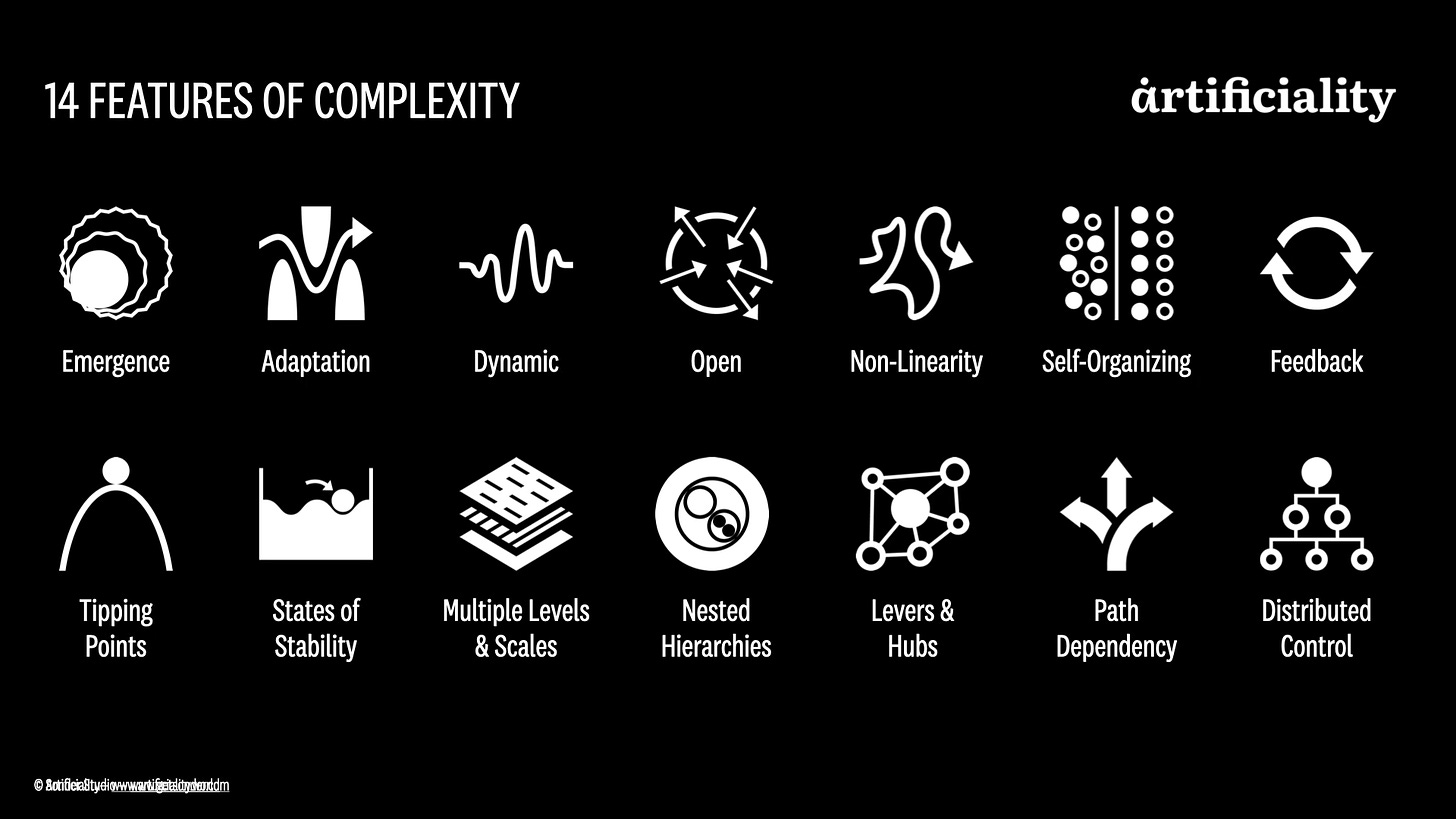

We examine generative AI through the lens of 14 features of complexity. You’ll see us apply these ideas as a way of offering insight into the behavior of our human-machine system. Don’t worry—we will be explaining these as we go! We believe that complexity science will give us better ways to conceptualize AI and help us develop our intuitions for how machines change our world.

Note: If you’re interested in digging further into complexity, listen to our podcast interview with David Krakauer, the President of the Santa Fe Institute, the leading institute on complexity science.
